Create time series of SciGraph article data¶

For articles published by members of the MPG (after 1945), we create rolling time windows of three years and treat all publications of this time slice as one unit.

For each time slice, we create one network file in the mulilayer pajek format, as defined for the infomap algorithm. Nodes are authors (level 1) and publication (level 2), while ngrams of publication titles are level 3. For each publication, we link all author nodes to the publication node. These are inter-layer edges. Furthermore, we link all author nodes among each other, which are the intra-layer edges. In addition, each publication is linked to the ngram nodes which are derived from its title.

This format can be extended to incorporate for example citations or co-citations to create intra-layer links among the publications (i.e. in level 2).

Imports¶

import pandas as pd

import datetime

from itertools import islice

import matplotlib.pyplot as plt

from matplotlib.backends.backend_pdf import PdfPages

import os

import re

import random

from tqdm import tqdm

import numpy as np

from functools import partial

from multiprocessing import Pool, cpu_count

from itertools import combinations

import infomap

import pandas as pd

from pathlib import Path

Setup paths¶

dataPath = '../data/processedData/'

wordScorePath = dataPath + 'mpgWordScores/'

outputPath = dataPath + 'pajekNetworks/'

infomapCLUPath = dataPath + 'infomapResults/clu/'

infomapFtreePath = dataPath + 'infomapResults/ftree/'

dfMPGall = pd.read_csv(dataPath + 'MPGPubSektionen_11112020.tsv',sep='\t')

Test format of Ngram data¶

dfNgramsTest = [pd.read_csv(wordScorePath + str(sl) + '.tsv', sep='\t',header=None) for sl in (1946,1947,1948)]

dfTestNgram = pd.concat(dfNgramsTest)

dfTestNgram[dfTestNgram[0] == 'sg:pub.10.1007/bf00643799'].tail(2)

| 0 | 1 | 2 | |

|---|---|---|---|

| 5 | sg:pub.10.1007/bf00643799 | ('überhitztem', 'arsenikdampf') | 3.0 |

| 6 | sg:pub.10.1007/bf00643799 | ('kondensationsvorgänge', 'überhitztem', 'arse... | 3.0 |

Create pajek files¶

For a given time slice, we create a sub-dataframe and run the routine on it. First, dictionaries of authors and papers are build (e.g. {1:Müller, 2:10.02212/123123}). Then, for each publication, if there are more then one author, we build pairs of author and add the intra-layer links. Then, for each author we create a inter-layer link to the publication. The resulting Pajek network file has no internal time-stamp anymore.

class LinksOverTime():

"""

To keep track of nodes over time, we need a global register of node names.

"""

def __init__(self, outputPath, scorePath, dataframe, debug=False, reCreate=False, windowSize=1):

self.dataframe = dataframe

self.outpath = outputPath

self.scorepath = scorePath

self.years = sorted(self.dataframe.year.unique())

self.nodeMap = {}

self.debug = debug

self.recreate = reCreate

self.windowSize = windowSize

def _window(self, seq):

"Returns a sliding window (of width n) over data from the iterable"

" s -> (s0,s1,...s[n-1]), (s1,s2,...,sn), ... "

n = self.windowSize

it = iter(seq)

result = tuple(islice(it, n))

if len(result) == n:

yield result

for elem in it:

result = result[1:] + (elem,)

yield result

def _createSlices(self):

slices = [x for x in self._window(self.years)]

return slices

def getLinks(self, sl):

debug = self.debug

slyear = sl[0]

if debug:

print(f'Slice: {slyear}')

dataframe = self.dataframe[self.dataframe.year.isin(sl)]

dfNgramsList = [pd.read_csv(self.scorepath + str(slN) + '.tsv', sep='\t',header=None) for slN in sl]

ngramdataframe = pd.concat(dfNgramsList)

ngramdataframe = ngramdataframe[ngramdataframe[0].str.startswith('sg:')]

authors = [x for x in set([x for y in dataframe.authors.fillna('None').str.split(';') for x in y]) if x]

pubs = dataframe.pubID.fillna('None').unique()

ngrams = ngramdataframe[1].unique()

if self.nodeMap.values():

currentMax = max(self.nodeMap.values())

else:

currentMax = 0

for authorval in authors:

if not self.nodeMap.values():

self.nodeMap.update({authorval:1})

else:

if authorval not in self.nodeMap.keys():

self.nodeMap.update({authorval:max(self.nodeMap.values()) + 1})

for pubval in list(pubs):

if pubval not in self.nodeMap.keys():

self.nodeMap.update({pubval: max(self.nodeMap.values()) + 1})

for ngramval in list(ngrams):

if ngramval not in self.nodeMap.keys():

self.nodeMap.update({ngramval: max(self.nodeMap.values()) + 1})

diffPubs = [x for x in ngrams if x not in pubs]

if diffPubs:

if debug:

print(f'{len(diffPubs)} missing Publications found, of {len(pubs)}')

if debug:

print('\tNumber of vertices (authors, papers and ngrams) {0}'.format(max(seld.nodeMap.values())))

filePath = self.outpath + 'multilayerPajek_{0}.net'.format(slyear)

if os.path.isfile(filePath):

if not self.recreate:

return 'exists'# raise FileExistsError('Please delete or move the old file.')

with open(filePath,'a') as file:

file.write("# A network in a general multiplex format\n")

file.write("*Vertices {0}\n".format(max(self.nodeMap.values())))

for x,y in self.nodeMap.items():

tmpStr = '{0} "{1}"\n'.format(y, x)

if tmpStr:

file.write(tmpStr)

file.write("*Multiplex\n")

file.write("# layer node layer node [weight]\n")

if debug:

print('\tWriting inter-layer links to file.')

for idx, row in dataframe.fillna('').iterrows():

authors = row['authors'].split(';')

paper = row['pubID']

if paper not in self.nodeMap.keys():

print(f'Cannot find {paper}')

ngramsList = ngramdataframe[ngramdataframe[0] == paper]

paperNr = self.nodeMap[paper]

if len(authors) >= 2:

pairs = [x for x in combinations(authors, 2)]

for pair in pairs:

file.write('{0} {1} {2} {3} 1\n'.format(1, self.nodeMap[pair[0]], 1, self.nodeMap[pair[1]]))

for author in authors:

try:

authNr = self.nodeMap[author]

file.write('{0} {1} {2} {3} 1\n'.format(1,authNr,2,paperNr))

except:

pass

for ngramidx, ngramrow in ngramsList.iterrows():

try:

ngramNr = self.nodeMap[ngramrow[1]]

weight = ngramrow[2]

file.write('{0} {1} {2} {3} {4}\n'.format(2, paperNr, 3, ngramNr, weight))

except:

raise

return 'done'

def run(self):

for sl in tqdm(self._createSlices()):

self.getLinks(sl)

return 'Done'

Test routine¶

createPajek = LinksOverTime(outputPath, wordScorePath, dfMPGall)

createPajek.getLinks(createPajek._createSlices()[0])

Generate pajek files¶

createPajek = LinksOverTime(outputPath, wordScorePath, dfMPGall)

createPajek.run()

100%|██████████| 74/74 [2:09:52<00:00, 105.30s/it]

'Done'

Use infomap algorithm on slices¶

The infomap algorithm can be used directly on the pajek files. Output is written in cluster (.clu) and flowtree format (.ftree) For a tutorial on the algorithm, have a look at this PDF by the developers.

pajekfiles = sorted([x for x in os.listdir(outputPath) if x.endswith('.net')])

researchphase = pajekfiles[:-14]

infomult = infomap.Infomap("-N1 -imultilayer -fundirected")

def calcInfomap(inFile, debug=False):

idx = inFile.split('_')[1].split('.')[0]

infomult.readInputData(outputPath + 'multilayerPajek_{0}.net'.format(idx))

infomult.run()

infomult.writeClu(infomapCLUPath + "slice_{0}.clu".format(idx))

infomult.writeFlowTree(infomapFtreePath + "slice_{0}.ftree".format(idx))

if debug:

print(f"Clustered in {infomult.maxTreeDepth()} levels with codelength {infomult.codelength}")

print("\tDone: Slice {0}!".format(idx))

return

# infom = calcInfomap(1956)

ncore = cpu_count() - 2

with Pool(ncore) as p:

max_ = len(researchphase)

with tqdm(total=max_) as pbar:

for i, _ in enumerate(p.imap_unordered(calcInfomap, researchphase)):

pbar.update()

100%|██████████| 60/60 [01:09<00:00, 1.16s/it]

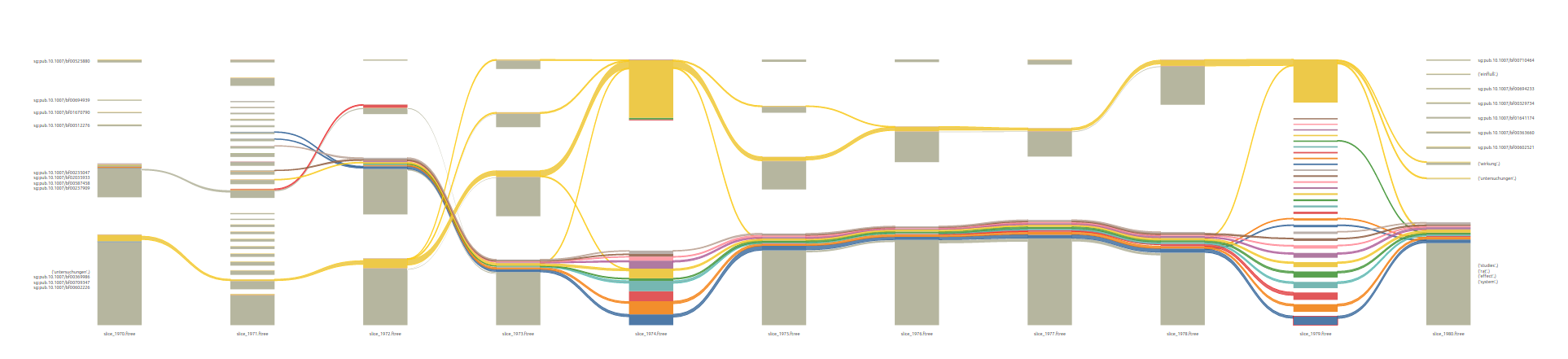

The resulting files in ftree format can be used for visualizations, e.g. on https://www.mapequation.org/alluvial/.